Hi. This is the channel for www.jollamind2.com development progress and any explanations about this new assistant box for Jolla phones or Sailfish OS phones.

Here I post a new video from ‘https://venho.ai’ https://m.youtube.com/watch?v=FxlLicL7Fnk

Here is also an interesting post about the Jolla-related AI assistant box: “Jolla Mind2: Your private AI, Your control #privacyfromfinland #jollamind2 #takecontrol - WTFAYLA with Antti Saarnio, co-founder of Jolla and founder of @Venhoai”.

See also here: https://m.youtube.com/shorts/mf1czn2NKyw

Jolla is hoping to find success with the Mind2, a single-board computer designed to run LLMs in a box for improved privacy.

See also https://youtu.be/YCOgIbFwq8g?si=RSv9NuHy7A0KuGNg

Here I have an interesting post from Jolla on LinkedIn: Introducing Jolla Mind2: Your Private AI Computer Powered by Venho.Ai

It makes zero sense for it to be a piece of hardware. An app on hardware you already have and carry around would work the same.

@tmindrup, please de-spam your links before posting, e.g. https://www.jollamind2.com/ instead of https://www.google.com/url?sa=t&source=web&cd=&ved=2ahUKEwiV0533xNiHAxXV1AIHHenTEq0QFnoECA8QAQ&url=https%3A%2F%2Fwww.jollamind2.com%2F&usg=AOvVaw1qt4MtrorIC5_uEmVbhX_3&opi=89978449

Clean links lead to exactly the same page, but do not tell Google (Alphabet), YouTube (also Alphabet) etc. how the link was spread, who used it and so on; handing over that information is not at all privacy-friendly (for you and for those who click on these links).
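For anyone who wants to automate this, here is a minimal sketch of such a link clean-up in Python. It only assumes what is visible in the links in this thread: Google redirect links carry the real target in a `url` parameter, and share links carry parameters such as `si` or `utm_*`. The function name `clean_link` and the list of tracking parameters are my own illustrative choices and certainly not exhaustive.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters that only serve tracking. This list is an assumption
# for illustration and is not complete.
TRACKING_PARAMS = {"si", "fbclid", "gclid"}
TRACKING_PREFIXES = ("utm_",)


def clean_link(url: str) -> str:
    """Return the link reduced to its necessary elements."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)

    # Unwrap Google redirect links: the real target is in "url" (or "q").
    if parts.netloc.endswith("google.com") and parts.path == "/url":
        for key, value in query:
            if key in ("url", "q"):
                return clean_link(value)

    # Keep only the parameters that are not known tracking parameters.
    kept = [
        (key, value)
        for key, value in query
        if key not in TRACKING_PARAMS and not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )
```

Parameters that are needed to find the content (e.g. the `v` of a YouTube watch link) are kept, because only the listed tracking parameters are dropped.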

Edit: Thank you very much for reducing these links to the necessary link-elements. Now there is a single one remaining.

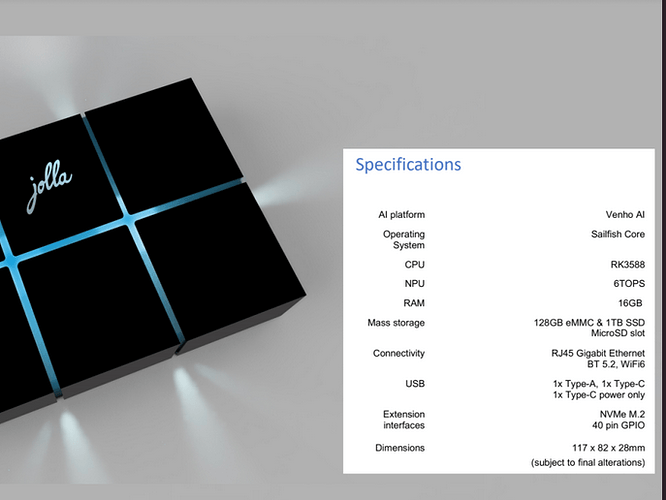

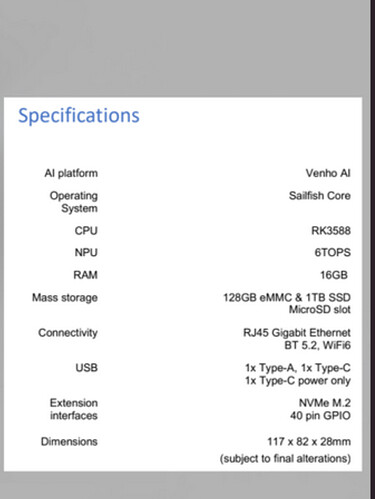

I think the ML models, in order to “answer” fast, use a specialized NPU, which might be available in some high-end smartphones, but not in the Xperia 10s AFAIK. The specialized hardware here has at least 6 TOPS on an NPU. The RAM requirements are usually high as well. So I do not carry that kind of hardware with me all the time. Also, I think it’s not a bad idea to have hardware running that “at home” and not in “the cloud”.

Which HW do you carry around, that is optimized for ML-Models?

A current Snapdragon or Tensor SoC outperforms this by far. It triggers an answer from an external AI anyway; all you need is a framework on any device.

From my understanding the Mind2 shall in most use cases process the request locally - so no external request if not explicitly asked for. And the User Interface for the Mind2 is device independent, isn’t it?

However, I do not carry a Tensor SoC and don’t know what you mean with SD.

So, ok, running something on a Desktop or other specialized HW at home might be an alternative for a bit more “trustworthy AI”.

From a housewife’s point of view, what is this AI device supposed to be good for?

A few - and, maybe, noob - questions: is it possible to use the Mind2 like a PC? Can I use it in other languages (e.g. Portuguese)?

Snapdragon. Your average midrange 7 year old snapdragon SoC even outperforms this rk3588. Even an N100 does. This is one of the cheapest ARM SoCs, and the Jolla Mind2 can do nothing that a 15€ RasPi couldn’t do: sorting emails, calendar etc… If you ask me it’s a scam. Their refusal to make it an app is a joke.

What are you comparing, CPU performance?

EDIT: I tried to find some numbers on NPU performance with ML models, which is difficult, but what I found shows considerable differences to running models on Raspberry Pi CPUs, e.g. https://www.reddit.com/r/OrangePI/comments/1annxuk/comment/kyp9nfo/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button - yeah, that is random people writing stuff. I can’t judge.

- No, only Qualcomm’s current high-end Snapdragon SoCs (8 series) outperform an RK3588. Their current midrange SoCs (6 and 7 series) offer roughly the same performance (depending on the application, e.g. whether it is memory intensive), and their low-end SoCs (4 series) are a lot slower; for details, see below.

- No, Jolla’s Mind2 is supposed to do all ML processing locally (i.e. on this device); that is exactly Jolla’s promise. Microsoft’s “Copilot+” basically does the same, but might reach out to various cloud services of Microsoft.

This is clearly not true:

The SoC of the Raspberry Pi 5 (released in 2023), the BCM2712, comes close but is not on par with the RK3588:

- No TPU (Tensor Processing Unit), nowadays often called “NPU” (Neural Processing Unit).

- No “little” CPU cluster (on the RK3588 this is a quad-core Cortex-A55 cluster).

- The BCM2712 is built using a cheap, old 16 nm process, while the RK3588 is built on Samsung’s 8 nm LPP (Low Power Plus) process, which allows for using less power and / or clocking it higher.

- The BCM2712 has a 32-bit LPDDR4/4x DRAM interface (two 16-bit channels), while the RK3588 has a 64-bit LPDDR4/4x/5 DRAM interface (four 16-bit channels) hence at least twice the memory bandwidth.

- The BCM2712 is minimalistic with regard to built-in peripherals (like all Broadcom SoCs) due to being designed for a single application (being used in Raspberry Pis), while most Rockchip SoCs including the RK3588 offer an extremely rich set of peripherals due to aiming at a broad spectrum of embedded applications (even surpassing Qualcomm’s Snapdragons, which are aimed at mobile phones, tablets and light laptops).

- Broadcom is well known for designing their chips and accompanying software with minimal efforts, which results in faulty, insecure and sometimes outright broken functions. They also very rarely fix flaws by releasing new revisions of their chips.

- Broadcom’s “Videocore” GPUs are pieces of shit: very slow and hard to program (i.e. to write and maintain drivers for).

- The Raspberry Pi 5 is only available with 4 GByte or 8 GByte RAM, in contrast to Mind2’s 16 GByte.

- A Raspberry Pi 5 with 8 GByte RAM costs about $ 80,- (not $ 15,-).
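To make the DRAM interface point from the list above concrete: theoretical peak bandwidth is simply bus width (in bytes) times transfer rate. The sketch below assumes, purely for illustration, that both SoCs run their memory at the same 4266 MT/s; the actual rate depends on the board and the memory fitted, so treat the absolute numbers as an assumption and only the ratio as the point.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


# Assumed transfer rate, identical for both chips (illustrative only).
ASSUMED_MT_S = 4266

bcm2712 = peak_bandwidth_gb_s(32, ASSUMED_MT_S)  # two 16-bit channels
rk3588 = peak_bandwidth_gb_s(64, ASSUMED_MT_S)   # four 16-bit channels

print(f"BCM2712: {bcm2712:.1f} GB/s, RK3588: {rk3588:.1f} GB/s, "
      f"ratio: {rk3588 / bcm2712:.1f}x")
```

At equal transfer rates the wider interface gives exactly twice the bandwidth; with faster memory (e.g. LPDDR5) on the RK3588 side the gap only grows, hence “at least twice”.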

See also this Wikipedia entry and the full RK3588 datasheet.

The A76 “big” CPU cores were released by ARM in 2018, design of the RK3588 started in 2020 and it has been in production since 2022, hence it is quite a modern SoC.

An Intel N100 processor (comprising four “Gracemont” CPU cores) is well comparable to the RK3588:

Both have 64-bit memory interfaces (N100: one 64-bit channel DDR4/DDR5/LPDDR5), the GPUs are roughly comparable, and the N100 offers slightly more CPU performance but lacks a TPU (“NPU”). The N100 is built using Intel’s “Intel 7” process (previously referred to as Intel 10 nm Enhanced SuperFin, “10ESF”) and was released in early 2023.

A Snapdragon 855 (Qualcomm’s high-end smartphone SoC released 2019) is very well comparable to the RK3588. Qualcomm implements their TPU functionality on their Hexagon DSPs, hence they very early offered explicit tensor processing (with the Hexagon 685 in 2018 which provided a mere 3 TOPS).

Qualcomm’s upper midrange SoCs (Snapdragon 7 series) all have only a 32-bit memory interface (two 16-bit channels), and so do their lower midrange SoCs (Snapdragon 6 series) since 2018. The low-end Snapdragon 4 series SoCs only have a single 16-bit channel memory interface, as did Snapdragon 6xx SoCs before 2018. BTW, the latest Snapdragon 4 SoCs lack a Hexagon DSP altogether (i.e. they do all audio and video decoding / encoding etc. on the CPU).

Thus “No”: no “average midrange 7 year old snapdragon SoC even outperforms this rk3588”. Seven years ago none of Qualcomm’s midrange SoCs even offered TPU functions, and in terms of CPU, GPU and memory performance the RK3588 also clearly beats any “midrange 7 year old snapdragon SoC”.

> Their refusal to make it an app is a joke.

Well, exactly that is their business model: telling people that the data on a box connected via network (Ethernet, WiFi etc.) is much safer than on a smartphone.

But it is definitely not a scam.

The RK3588’s 6 TOPS for Int8 tensors is in the same ballpark as the first PC / Laptop processors with built-in TPUs: AMD’s Ryzen 7000 and Ryzen 8000 desktop and laptop processors (released in 2023) with TPUs (“Ryzen AI”) offer between 10 and 16 TOPS, while the TPUs in Intel’s brand new (2024) Meteor Lake-based Core Ultra CPUs top out at 10 TOPS.[1]

This is much faster than running ML models in software on a CPU and faster than running them on an integrated GPU.

But for having a device certified for Microsoft’s “Copilot+” (which addresses exactly the same use cases on a PC / laptop as Jolla’s Mind2 does in an external box), it has to offer 40 TOPS locally, which only brand new CPUs are capable of: Qualcomm’s “Snapdragon X Elite / X Plus” (40 - 45 TOPS, mid 2024), AMD’s “Ryzen AI 300 series” (50 - 55 TOPS, July 2024), Intel offers nothing comparable.
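As a quick way to see these figures side by side, here is a small sketch that checks each NPU against the 40 TOPS Copilot+ threshold. The numbers are just the upper ends of the ranges quoted in this post, not independently measured values; the dictionary and function names are made up for illustration.

```python
# Peak Int8 TOPS as quoted in this post (upper end of each range).
NPU_TOPS = {
    "Rockchip RK3588": 6,
    "AMD Ryzen 7000 / 8000 (Ryzen AI)": 16,
    "Intel Core Ultra (Meteor Lake)": 10,
    "Snapdragon X Elite / X Plus": 45,
    "Ryzen AI 300 series": 55,
}

COPILOT_PLUS_MIN_TOPS = 40


def meets_copilot_plus(tops_by_chip: dict[str, int]) -> list[str]:
    """Return the chips whose NPU reaches the Copilot+ threshold."""
    return [
        chip for chip, tops in tops_by_chip.items()
        if tops >= COPILOT_PLUS_MIN_TOPS
    ]


for chip, tops in NPU_TOPS.items():
    status = "meets" if tops >= COPILOT_PLUS_MIN_TOPS else "below"
    print(f"{chip:35s} {tops:3d} TOPS  ({status} the 40 TOPS threshold)")
```

Raw TOPS say little about what a given piece of software actually achieves, so this only illustrates the hardware classes being compared.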

My summary is: The Mind2 is the best Jolla could possibly achieve when they started designing the hardware (Qualcomm hardware is not a good choice when one cannot order in batches of at least 10.000 SoCs). Ultimately the software is decisive for what functionality Jolla’s Mind2 offers to end users; whether the SoC is performant enough for that largely depends on the software and on what exactly it is used for (i.e. which tasks, what kind of data and how much data).

Yes, but it offers no display connectivity and you pay a lot for Jolla’s software stack. You might better buy a cheap desktop PC (“Chinese box”), or an RK3588 board and an enclosure for the money, and install a Linux distribution of your choice on it.

> Can I use it in other languages (e.g. Portuguese)?

I would expect Jolla’s ML software to support entering prompts and classifying texts in various languages, but properly training it for a large variety of languages is time-consuming, so do not expect that to work well right from the start.

If Jolla is smart, they provide the infrastructure for “the community” to perform this task, as they (uniquely) did for translating strings for SailfishOS. Well, as it initially takes more work to set up this infrastructure in a way that is usable for everyone, and Jolla’s smart moves are rare, I do not expect much, but would be glad to be positively surprised.

P.S.:

Again (now someone else), please do refrain from posting spam-links (this addresses the link itself, not the link target!).

Thanks for showing how homework should be done. I admit I am myself lost in these details and really hope you are right in that they chose the most cost effective and efficient way and not any less than that.

I knew that some of the statements I ended up refuting were nonsense, but had to look up many of the details in order to make concise and well founded statements.

Well, it was Sunday, and I would never ever use cloud-based ML applications for use cases which involve my personal data (pictures etc.). But some capable ML software has recently become available as Open Source Software (among them the one Jolla uses for their Mind2), and significant tensor processing performance is about to become easily available locally without a GPU / TPU card that costs many hundreds of dollars, consumes at least 150 W when performing serious calculations and sounds like a vacuum cleaner. That caught my interest a little while before Jolla announced the Mind2. Hence it was interesting to research the current status quo of TPUs and their performance in various CPUs / SoCs.

> I admit I am myself lost in these details and really hope you are right in that they chose the most cost effective and efficient way and not any less than that.

For Jolla, definitely. But their “window of opportunity” is small: all recent Qualcomm SoCs (except for the low-end “4 series”) offer at least basic tensor processing capabilities (the current high-end “8 series” models much more than that), and so do the aforementioned x86 CPUs (except for Intel, who missed the start of this trend towards running ML apps locally). Hence the question why Jolla does not offer their ML application as stand-alone software is reasonable. Well, they still can do that if their “Mind2” ML appliance fails, so they may still get their ROI (return on investment) for the software development; the RK3588 board they use is likely designed and built by someone else anyway, so they did not invest much in the hardware (probably only the enclosure).

TL;DR

Time to market with a well working “Mind2” ML-appliance which provides easy-to-understand, easy-to-use and unique benefits to its users is crucial for Jolla.

One of the devs in the Jolla Mind 2 Discord also talked about connecting your own local LLM, which you can then run on another server/PC in your home network. There was also a discussion about adding a WOL (Wake-on-LAN) function for this, so that the other PC/server is only on when needed for extra power.
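For those unfamiliar with WOL: the standard Wake-on-LAN “magic packet” is six 0xFF bytes followed by the target’s MAC address repeated 16 times, usually sent as a UDP broadcast. Below is a minimal generic sketch of that in Python. It is not how the Mind 2 does or will do it (nothing about that has been published as far as I know); the function names, the broadcast address and port 9 are common conventions and my own assumptions, and the MAC address in the usage comment is a placeholder.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet for a MAC like '00:11:22:33:44:55'."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"not a valid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    # 6 x 0xFF as synchronisation stream, then the MAC 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))


# Usage (placeholder MAC of the server that hosts the bigger LLM):
# send_wol("00:11:22:33:44:55")
```

The target machine has to have Wake-on-LAN enabled in its firmware and network adapter settings, and the packet normally only reaches devices in the same network segment.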

The Jolla Mind 2 is also made by Reeder, just like the Jolla C2, and it is most likely a custom board and not something off the shelf: I myself did not find any other board that is close enough in port layout etc. So I would guess that the dev version is just an I/O board, like the many that exist for the Raspberry Pi Compute Module but for the RK3588, to cut some hardware development costs.