Sailfish OS update from Jolla

In this fortnight’s update, developer tools are moving forward step by step, and once again we are doing this together with you, the Sailfish OS community. We also have a roundup of AI-based apps for Sailfish OS. Hats off to the authors of the featured apps: great stuff and a great read.

Please also enjoy the latest Sailfish OS release, Sauna, which we rolled out to all users right before Juhannus. This great milestone was achieved together with all of you, big thanks! We wish you all a relaxing summer.

Have a cup of coffee or tea and enjoy this fortnight!

Repository roundup

Developers will be pleased to see that improvements are coming to the build chain. GCC has recently been upgraded to version 10, and mal is already working on moving to version 13. direc85 also worked on upgrading the build chain for Whisperfish, bringing Rust to version 1.79 and LLVM to version 18. Even Autotools are getting an upgrade.

Communication bits

- libqofono, the QML bindings to oFono, jmlich noticed and fixed some issues in the XML formatting of the D-Bus interface.
- buteo-syncml, the SyncML plugin, sunweaver improved the tests by using the $TMPDIR environment variable instead of hardcoded paths, and also fixed typos in warning messages.
- libcommhistory, the library to access SMS/phone history, dcaliste introduced a new class to hide the D-Bus machinery from the exposed API of the library.
- commhistory-daemon, the daemon handling call and message history, dcaliste removed the direct D-Bus listeners from the MMS helper program, and pvuorela made the same fix in another part of the code.
- bluez5, the Linux Bluetooth stack, mal moved to version 5.76.
- voicecall, the open source part of the calling application, dcaliste slightly improved the process of exposing the current call to D-Bus, and pvuorela fixed warnings when sending DTMF tones.
- messagingframework, the email Qt framework, dcaliste and pherjung noticed memory corruption when '\' or '"' characters are used in passwords.
- connman, the connection manager, Laakkonenjussi upgraded it to 1.36 and fixed the iptables extension for this new version.
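The buteo-syncml test change mentioned above follows a common pattern: tests should create scratch files wherever the environment says temporary files belong, not at a fixed path. buteo-syncml itself is written in C++, so what follows is only a hypothetical Python sketch of the same idea and not the project's code. The function name `make_scratch_dir` and the `syncml-test-` prefix are invented for illustration.

```python
import os
import tempfile


def make_scratch_dir(prefix: str = "syncml-test-") -> str:
    """Create a fresh, uniquely named scratch directory for one test run.

    Hypothetical helper: honour $TMPDIR when it is set (build hosts and
    sandboxes often point it somewhere private) and only fall back to the
    platform default temporary directory when it is not.
    """
    base = os.environ.get("TMPDIR") or tempfile.gettempdir()
    # mkdtemp creates the directory with a unique name, so parallel test
    # runs cannot collide the way a hardcoded path would.
    return tempfile.mkdtemp(prefix=prefix, dir=base)


if __name__ == "__main__":
    path = make_scratch_dir()
    print(path)
    os.rmdir(path)  # remove the (still empty) scratch directory again
```

Running it with `TMPDIR=/some/private/dir` creates the directory under that location rather than in `/tmp`.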

Calendar bits

- nemo-qml-plugin-calendar, the QML plugin for calendar access, dcaliste fixed a lag that occurred when the calendar database is slow: before the fix, opening an event could take several seconds. pvuorela changed the private members to consistently use the m_ naming convention.

Multimedia framework

- imagemagick, a multi-purpose image manipulation tool, mal updated it to 6.9.13-11.

User interface

- maliit-framework, the code handling the keyboard, mal updated it to 2.3.0.
- nemo-qml-plugin-systemsettings, the QML bindings for global settings, keto fixed the output of the WLAN MAC address when it is malformed.
- qmf-notifications-plugin, a plugin that sends notifications about email changes, dcaliste proposed dropping unread-email notifications from the event screen as soon as the emails are opened in the mail application, rather than when propagation of the read status to the server completes. This prevents notifications from lingering when emails are read offline.
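The qmf-notifications-plugin proposal above comes down to choosing which event clears a notification: the local "email opened" event rather than the server acknowledging the read flag. The plugin is written in C++, so the following is only a hypothetical Python model of the policy as described, not the plugin's actual code. The class and method names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EmailNotifier:
    """Hypothetical model: notifications keyed by message id."""

    unread: set = field(default_factory=set)        # notifications shown
    pending_sync: set = field(default_factory=set)  # read flags not yet on server

    def on_new_mail(self, msg_id: str) -> None:
        self.unread.add(msg_id)

    def on_opened_locally(self, msg_id: str) -> None:
        # Proposed policy: drop the notification as soon as the user opens
        # the email in the mail application...
        self.unread.discard(msg_id)
        # ...and only queue the read status for the server. Offline, this
        # queue may wait a long time, but the notification is already gone.
        self.pending_sync.add(msg_id)

    def on_server_ack(self, msg_id: str) -> None:
        # The server confirmed the read flag; nothing left to propagate.
        self.pending_sync.discard(msg_id)

    def notifications(self) -> list:
        return sorted(self.unread)
```

With the old behaviour, the notification would only have been cleared in `on_server_ack`, which never fires while the device is offline.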

Low level libraries

- xz, a compression library, mal updated it to 5.6.2.
- poppler, the PDF rendering library, attah is working on an update to version 24.06.0.
- libvpx, a VP8/VP9 video codec library, mal updated it to 1.14.1.
- nss, Network Security Services from the Mozilla Foundation, mal updated it to 3.101.
- dbus, the well-known inter-process message-passing daemon, mal updated it to 1.14.10.
- gmime, a library for creating and parsing MIME messages, mal updated it to 3.2.14.
- ssu, the seamless software updater, pketo added the ssu domain to the repository properties and fixed how the flavour is set for repositories.

Developer’s corner

- ncurses, the library for text-based terminal interfaces, mal updated it from 6.3 to 6.5.
- gcc, the GNU Compiler Collection, mal is working on an upgrade to version 13. Before it can be pushed, the issues that the new GCC raises, from possible bugs to compilation failures, need to be addressed in various repositories:
  - in buteo-sync-plugins-social,
  - in nemo-qml-plugin-contacts,
  - in sailfish-secrets,
  - in scratchbox2,
  - in sailfish-browser,
  - in gecko-dev,
  - in nemo-qml-plugin-systemsettings.
- fuse3, utilities for filesystems in userspace, mal updated it to 3.16.2.
- mpfr, a library for multiple-precision floating-point calculations, mal updated it to 4.2.1.
- autoconf, the infamous GNU build system tool for automatic system detection, mal is proposing an upgrade to 2.72 (from 2.71). This also requires adjusting other projects for the new version:
  - in gpgme.
- sed, the command-line stream editor, mal updated it to 4.9.
- strace, the system call tracing tool, mal updated it to 6.9.
- contactsd, the Telepathy <-> QtContacts bridge for contacts, sunweaver proposed fixes for some file inclusion issues in the tests.
- rust, the Rust programming language compiler, direc85 is proposing an upgrade to 1.79.
- llvm, the machinery to build compilers, direc85 has investigated the upgrade to 18.
- sdk-build-tools, the scripts used to build the Sailfish SDK in its production environment, martyone proposed basing the host parts of the tools on Ubuntu 22.04.
- util-linux, a collection of basic system utilities, Thaodan modified the spec file towards having all tools under /usr.
- sailfish-qtcreator, the Qt IDE, martyone fixed issues when running on Ubuntu 22.04 and upgraded the internal GDB to 12.1.

App roundup

With Juhannus and the longest day still in recent memory, and with a long hot summer stretching out ahead of us, it’s time for the final app roundup before the summer break. With Jolla introducing its own flavour of AI for the privacy-conscious, and with many people taking the opportunity to visit far-flung places over the summer, what better way to wrap up today’s newsletter than with a roundup of AI-based apps for Sailfish OS, including a couple that may turn out to be super-useful for communicating with the locals while you’re away from home.

Nobody can have avoided the discussion around how AI tools are going to revolutionise society, in both good and bad ways. And Sailfish OS has enjoyed its own bounty of AI tools recently. Speech recognition, translation and optical character recognition are all now well served on Sailfish OS, thanks to highly capable machine learning models being made easily available for use either locally or via online services. Today we’re going to look in more detail at some of these tools.

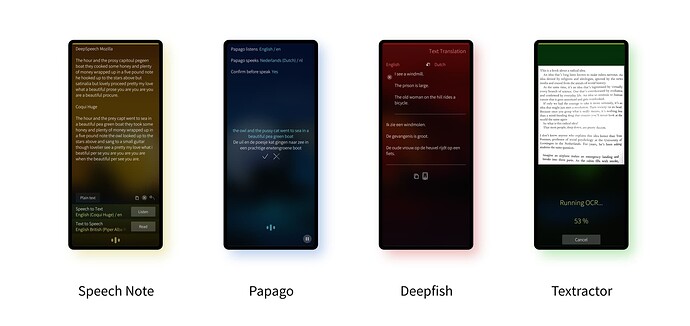

First up in our roundup is a trio of apps from Michal Kosciesza (mkiol). Michal’s Speech Note, Papago and Speech Keyboard all work closely together to provide a very effective set of tools for speech input, speech output and speech translation.

Michal is to be applauded for how professional the apps feel, with crisp and clean user interfaces, an astonishing breadth of configurability and impressively clean integration with Sailfish OS itself.

Let’s take each app in turn. At the heart of the suite is Speech Note, which by itself provides access to the three main capabilities of the AI models it incorporates: speech to text, text to speech and language translation.

The app offers two main modes. The first is a notebook-style interface that, with a tap on the “Listen” button, lets you dictate passages that the model then converts into text. The models run locally, so all of your secrets stay on-device, and processing is surprisingly swift in spite of this. You can also type text in directly, or load text from a file. Once you’ve got your text, a tap on the “Read” button makes the app read your words back to you in one of the many available voices.

The second mode changes the layout to offer two panes, arranged either horizontally or vertically depending on the orientation. This is translation mode, with the additional option to convert the text in one of the panes into a different language in the other pane.

Visually, all three apps use a nice three-line audio wave symbol to indicate that they’re listening, alongside a similarly clear four-dot spinner when they’re thinking. The app is really great to use and nicely presented.

But all of this high-quality app design would be for nought if the language models didn’t do a good job of understanding your words. There are so many available models and voices that it’s impossible for me to give a comprehensive view of them all, but after trying several of the models I found that the quality varied quite widely. That’s not so surprising, given that in many cases the differentiating factor isn’t how the model was trained, but rather how many parameters it has.

For speech to text I tried out Mozilla’s DeepSpeech model and wasn’t especially impressed. It struggled to turn my words into coherent sentences; if I were to use it in earnest I think I’d spend more time correcting it than if I’d just typed the text in directly using the keyboard from scratch.

The Coqui Huge model, on the other hand, produced much better results. Still not perfect, but it felt good enough to be usable for real work. The improvement isn’t so surprising when you consider that Coqui is a continuation of DeepSpeech, forked after Mozilla dropped support for it. Sadly, Coqui is now no longer supported either, although as an open source codebase there’s still a chance it will be picked up again.

Still, the app supports numerous other models, including Whisper from OpenAI, Vosk from Alpha Cephei and the April-ASR variants of the Icefall project models.

In the opposite direction, I found the text-to-speech output, at least for English, to be of incredibly high quality for an open source project. It sounded far better than the more robotic output I’m used to from Mimic or PicoTTS, for example.

Version 4.5.0 of Speech Note is a superb app and available from OpenRepos.

Let’s move on to Speech Keyboard. Far less obtrusive, Speech Keyboard integrates beautifully with Sailfish OS. After installing the app there’s no visible way of using it until you check the available keyboards under the Text Input section of the Settings app. There you’ll find that many languages now appear twice: once as the usual keyboard, then again with a little “Speaking Head” emoji alongside. Choose one of these new options and you’ll find a new keyboard variant is available. Instead of the usual suggestions bar you’ll now find Michal’s three-bar speech-to-text icon. Press it, speak into your phone, and the keyboard will automatically type out your words. The model used is the one selected as default in the Speech Note app, which you’ll also need installed in order to download and configure the models. That means you get the same quality of recognition, depending on which model you’ve chosen.


Version 1.6.0 of Speech Keyboard is available from OpenRepos.

The final app in the suite is the Papago translation app. This wraps up the functionality of the Speech Note app into an almost real-time communicator. We’ve not quite reached Babel Fish levels of seamless translation, but it’s honestly not far off. Speak in one language and a few seconds later the app will translate your words and speak them out to whoever you’re in conversation with. In theory it should be possible for two people sharing no common language to converse using just this app.

As with the other two apps in the suite, Papago, currently at version 2.1, is available from OpenRepos.

As you can probably tell, I’m quite impressed by all three apps. The app design is high quality, they’re highly configurable, and there’s a large range of models to choose from. What’s more, all of the inference happens on-device, so there’s no need to send data to an external server.

Another AI model-based translation tool that’s worth highlighting is Deepfish from jojo. On the face of it the app is quite similar to Speech Note when configured for translation mode, but underneath things are quite different. Deepfish makes use of DeepL’s online translation service, also machine-learning based, but operating on DeepL’s own servers.

There are pros and cons to both approaches: some may baulk at the loss of privacy inherent in sending data to an external service. On the other hand, the quality of DeepL’s translation is very high and likely to improve over time, independent of development of the app itself.

In the translation tests I tried the results were indeed excellent. The app is simple to use and fully text-based, so you’ll have to type in and read out the results “manually”. That’s not necessarily a bad thing of course, it very much depends on what you’re trying to achieve. DeepL is a commercial service, but an API key that will allow up to 500 000 characters of translation per month is available for free from the DeepL website.

I really liked the simplicity and effectiveness of Deepfish. I also like the fact that we have choices offering distinct benefits and features. Version 2.2 of Deepfish is currently available from OpenRepos.

The final app I want to talk about today is Textractor, currently maintained by Sebastian Matkovich (aviarus) but originally developed by Olli-Pekka Heinisuo. It lets you take an image, supplied in one of three supported ways, and convert the text shown in it into actual text that can be copied out and used in other applications.

You can supply an image in three ways: by capturing it with the built-in camera, by selecting an image from your photo gallery, or by selecting a PDF file already on your device. As you might expect, the first approach gives you a viewfinder to take the photo, which was fine apart from the orientation detection, which seemed to insist that my image be in landscape mode. Taking the photo with the Camera app instead allowed me to work around this and, in practice, worked extremely well. After selecting a photo, you’re asked to delineate the area for analysis by dragging the corners of a quadrilateral. The OCR works best when text is in a clearly aligned block, so while this was a little fiddly, it should allow for much better results. Being able to select the area in this way also makes performing OCR on text in a column-based layout far more practical.

For high-contrast text using a standard font I found the OCR worked extremely well. I was able to lift pages from a paperback book with very high accuracy. For handwritten text or cursive fonts, I got much more varied results. Given that the OCR is happening entirely on-device, I was nevertheless very impressed that it worked so well. Performing OCR on a single page took about a minute, which also felt reasonable for on-device conversion.

The fact that you can pull PDF files directly into the app is also a really neat feature.

Version 0.7 of the Textractor app is well worth trying out and is available from OpenRepos.

I had hoped to also include the ChatGPT app from Dominik Chrástecký (Rikudou_Sennin). We already covered this back in May 2023 and back then the app worked extremely well. Unfortunately due to changes in the way OpenAI allow access to their API, I wasn’t able to get a successful result this time around. We’ll come back to it in a future newsletter and I’ll try to get better results. In the meantime, if you’d like to give it a go yourself, version 0.9.12 is available from OpenRepos.

As you can see, the options for running AI on your Sailfish OS device are already quite varied, offering practical and useful tools. We can only expect this utility to improve over time. But what’s also extremely encouraging, for me at least, is how effective they are even with inference happening entirely on the device itself.

This bodes really well for the future and it’s great, as always, to see Sailfish developers making the most of the opportunities that these new capabilities bring.

That’s it for today. Until the next newsletter later in the year, let me take the opportunity to wish you all a glorious and Sailfish-filled summer!

Please feed us your news

The next newsletter will be published in August. Please continue sharing your ideas for future newsletter topics.

And do also join us at our next community meeting on IRC, Matrix and Telegram on the 4th July. It’s a great place to discuss any of the content you see here, ask questions and share your ideas. After that, we are thinking of pausing community meetings for the rest of July.